An interactive installation in which participants cooperate to solve augmented reality physics puzzles.

Introduction

Incredible Machine was the final-year project for my Bachelor of Science in Creative Computing degree at Goldsmiths College, and was awarded best project of the year. Combining motion tracking, physics simulation and computer vision techniques, the installation lets two or more participants collaborate to manipulate on-screen objects and achieve a goal.


Background

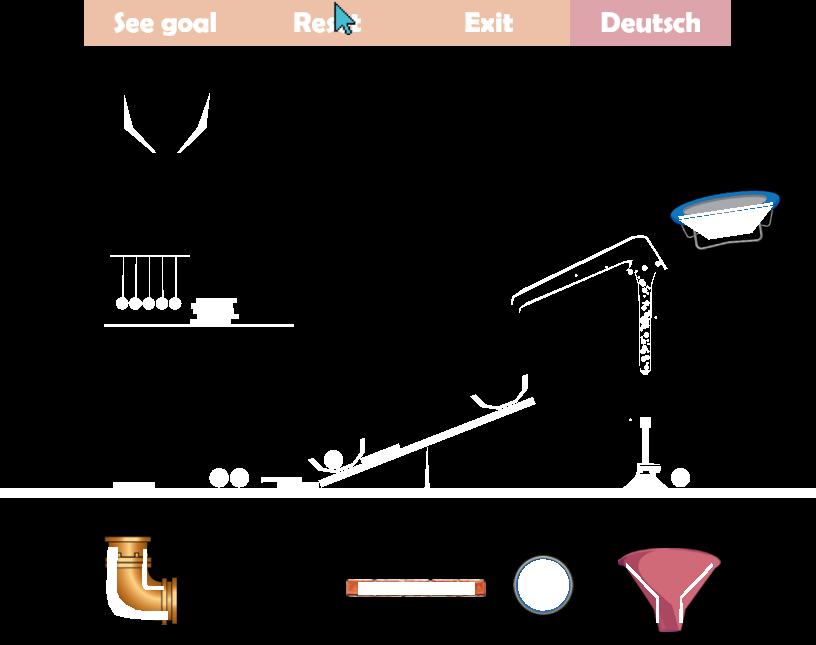

The concept was heavily influenced by The Incredible Machine 2, made by Sierra, which I absolutely loved as a child. The game was initially developed to teach Newtonian physics to children, and explores basic principles of energy transformation. The player solves a series of puzzles by building complex "Rube Goldberg" style contraptions: for example, a mouse running in a wheel provides the rotational energy to drive a conveyor belt, which in turn speeds a ball onto a flint stone, causing a spark that lights the fuse of a rocket. I was interested in adapting these puzzles to a mixed reality scenario, combining physical and on-screen action.

Other ideas I wanted to explore included encouraging creativity in a public space, art as play, using gamification techniques to help people lose their self-consciousness, and collaborative play as a way of building bonds between strangers.

Exploration

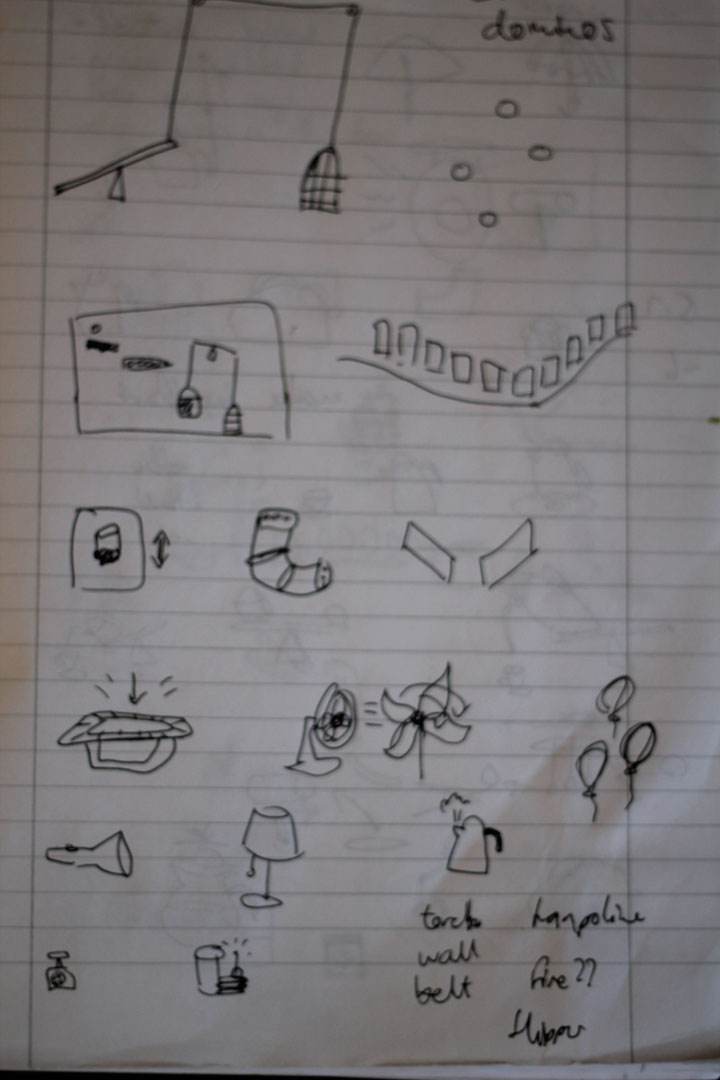

The first step was exploring how I could build a 2D physics world. I settled on Box2D, an open source physics engine that provided the backbone of the game Angry Birds. This was a new challenge, and my first experiments involved a human-like on-screen "puppet" that was rendered in vvvv and controlled via body tracking with a Kinect. This proved problematic, as Box2D has no real tools for modelling a self-supporting skeleton. Even with joints connecting the head, body and limbs in all the right places, the puppet would simply collapse to the ground without constant intervention.

I then tried to "pin" the head and hands to the positions received from the Kinect, but these would stutter, and the tracked points could often move faster than the physics world allows, causing elements to pass through each other in "impossible" ways (a hand through a wall, or a ball through an arm). My next solution was to use the Kinect points as sources of forces that dragged the puppet skeleton towards them. This created physically plausible movement, but was unresponsive and hard to control.

Facing these challenges, I decided to abandon the puppet and track only one or two points for each player, using computer vision "fiducial markers" to represent different objects in the world. Fiducial markers are a set of designs that allow a computer to recognise objects even at low resolution or in poor lighting conditions.
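The force-based approach can be sketched as a spring-damper that pulls a body towards a tracked point. This is a minimal illustration in Python, not the original vvvv patch: the `Body` class, the `stiffness`, `damping` and `max_force` values, and the 60 Hz time step are all assumptions chosen for the example. Box2D 2.x has a built-in constraint in a similar spirit (`b2MouseJoint`), but the sketch integrates the motion by hand so that it runs without any library.

```python
from dataclasses import dataclass


@dataclass
class Body:
    """A point mass standing in for one puppet part (hypothetical)."""
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0
    mass: float = 1.0


def attraction_force(body, target, stiffness=50.0, damping=10.0):
    """Spring-damper force pulling the body towards a tracked point."""
    tx, ty = target
    fx = stiffness * (tx - body.x) - damping * body.vx
    fy = stiffness * (ty - body.y) - damping * body.vy
    return fx, fy


def step(body, target, dt=1 / 60, max_force=200.0):
    """Advance the body one physics step towards the tracked target."""
    fx, fy = attraction_force(body, target)
    # Clamp the force magnitude so a sudden jump in the tracked point
    # cannot accelerate the body arbitrarily hard in a single step.
    mag = (fx * fx + fy * fy) ** 0.5
    if mag > max_force:
        fx, fy = fx * max_force / mag, fy * max_force / mag
    # Semi-implicit Euler: update velocity first, then position
    # using the new velocity.
    body.vx += fx / body.mass * dt
    body.vy += fy / body.mass * dt
    body.x += body.vx * dt
    body.y += body.vy * dt
```

The trade-off described above shows up directly in the parameters: higher `stiffness` and `max_force` make the body follow the hand more closely, while lower values give smoother but laggier, less controllable motion.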

Development

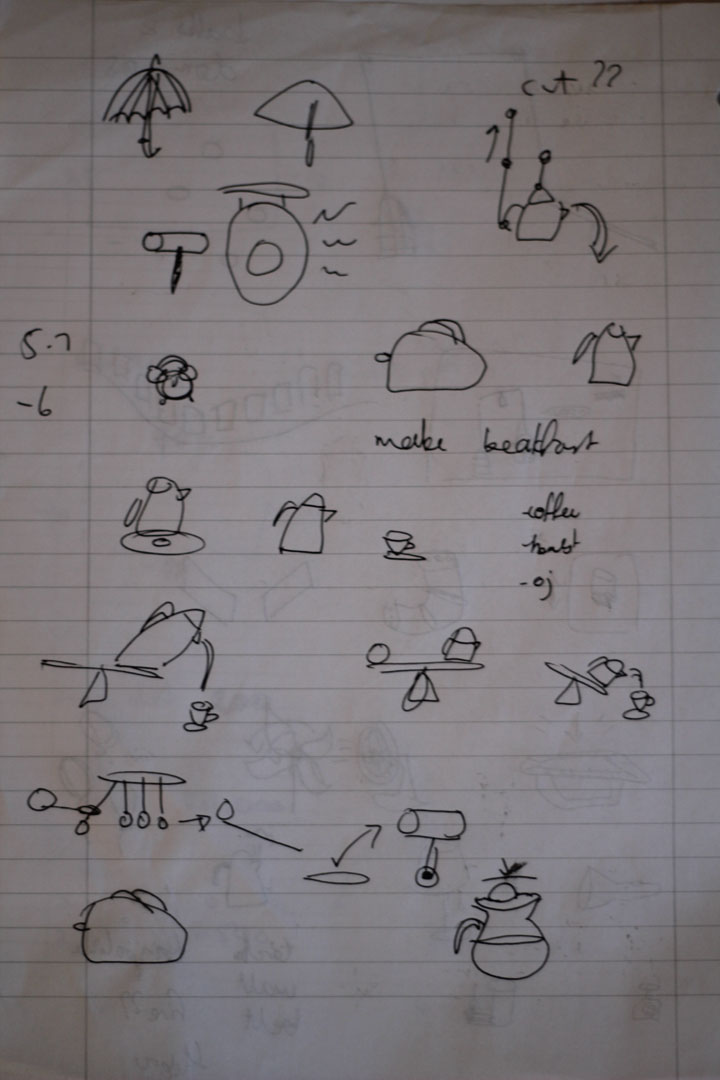

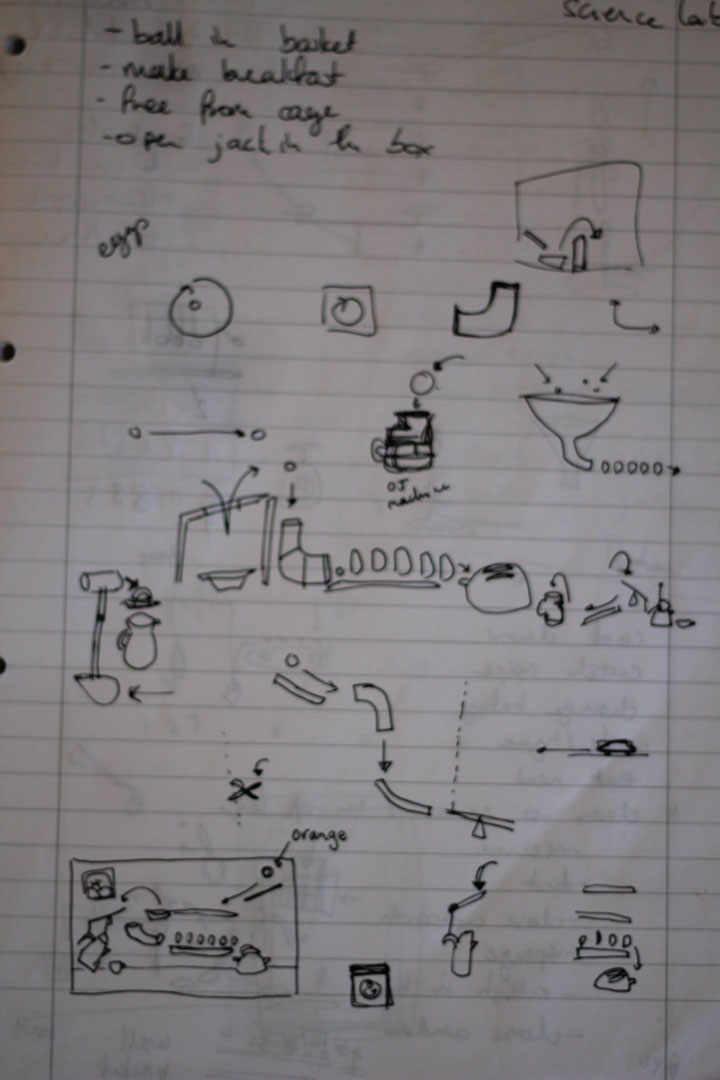

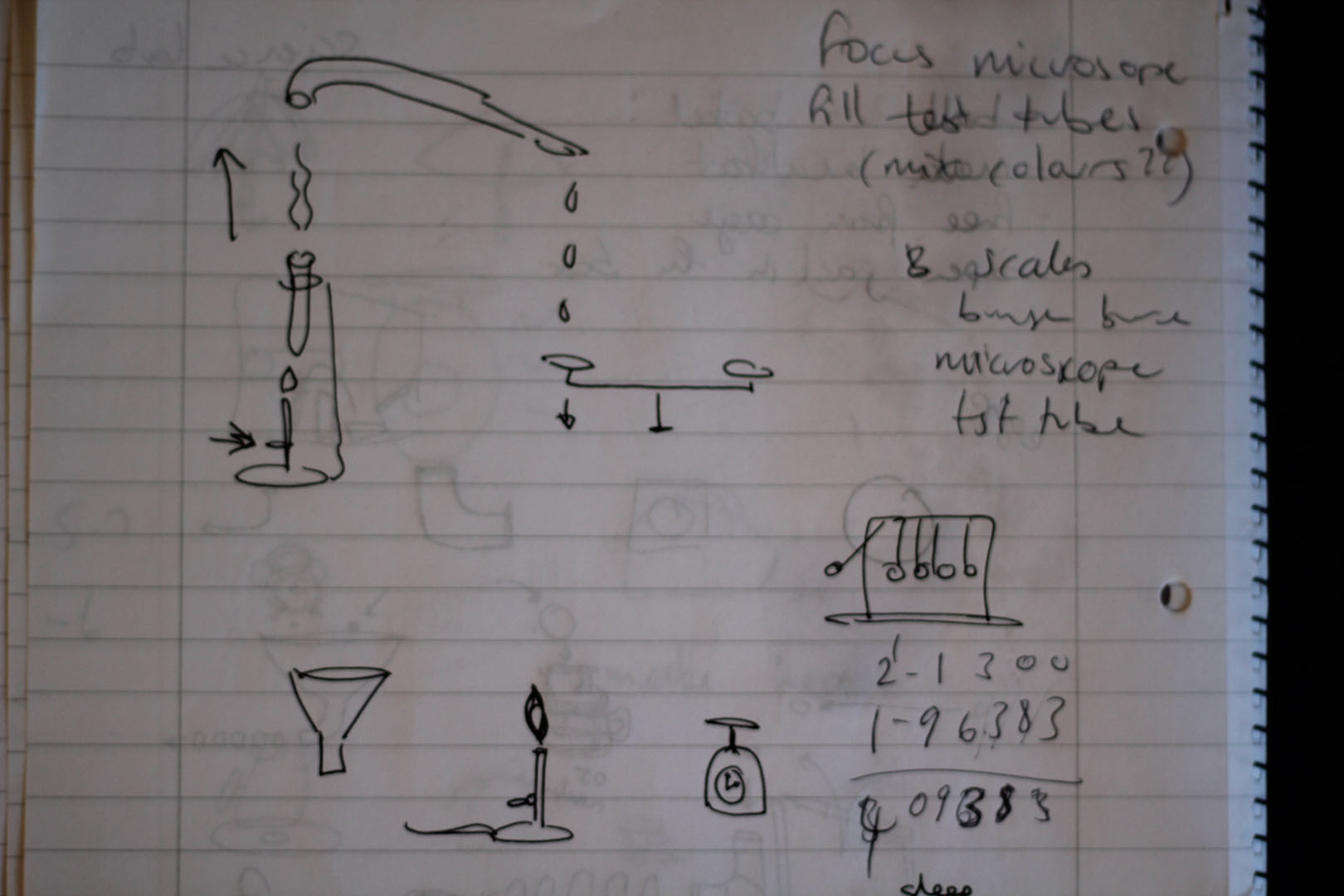

The next step was to design the game environment, that is, the physical scenes and the control objects. I developed two scenes: a kitchen and a science laboratory. In the kitchen, the goal was to make toast and pour water from the kettle for tea; the boiling water was modelled with lots of small balls. In the laboratory, the goal was to tip the scales, which involved boiling a test tube over a Bunsen burner.

To build the environments I used the open source RUBE Box2D Editor, which provides a drag-and-drop visual interface for creating Box2D objects and the joints that connect them, and exports a JSON representation of those objects. I wrote a vvvv library to parse the JSON files and automatically create the objects and joints in the vvvv environment. From there it was a matter of "skinning" the world with designs, defining goals, and building the user interface. One of the biggest challenges was keeping track of and switching between game states (menu, in level, goal achieved, etc.), although this was largely due to my inexperience with vvvv: I later found out that it has a node specifically for this purpose.
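The import step, turning RUBE's JSON export into object and joint definitions, can be illustrated with a small parser. The sketch is Python rather than vvvv and assumes a simplified subset of the export format: top-level `body` and `joint` arrays, body `type` codes 0/1/2 meaning static/kinematic/dynamic, joints referring to bodies by array index through `bodyA` and `bodyB`, and zero vectors that may be written as a bare `0`. The real export carries many more fields (fixtures, shapes, joint anchors and limits), and all class, function and body names here are hypothetical.

```python
import json
from dataclasses import dataclass

# Assumed mapping of RUBE body type codes to Box2D body kinds.
BODY_TYPES = {0: "static", 1: "kinematic", 2: "dynamic"}


@dataclass
class BodyDef:
    name: str
    kind: str
    x: float
    y: float
    angle: float


@dataclass
class JointDef:
    kind: str
    body_a: str
    body_b: str


def read_vec(value):
    """Read an {"x": .., "y": ..} vector; assume a bare 0 means (0, 0)."""
    if isinstance(value, dict):
        return float(value.get("x", 0.0)), float(value.get("y", 0.0))
    return 0.0, 0.0


def parse_scene(text):
    """Parse a (simplified) RUBE JSON scene into body and joint definitions."""
    scene = json.loads(text)
    bodies = []
    for i, b in enumerate(scene.get("body", [])):
        x, y = read_vec(b.get("position", 0))
        bodies.append(BodyDef(
            name=b.get("name", f"body{i}"),
            kind=BODY_TYPES.get(b.get("type", 0), "static"),
            x=x, y=y,
            angle=float(b.get("angle", 0.0)),
        ))
    joints = []
    for j in scene.get("joint", []):
        # Joints reference bodies by their index in the "body" array.
        joints.append(JointDef(
            kind=j.get("type", "unknown"),
            body_a=bodies[j["bodyA"]].name,
            body_b=bodies[j["bodyB"]].name,
        ))
    return bodies, joints


# Hypothetical two-body scene in the assumed format.
SAMPLE = """{
  "body": [
    {"name": "toaster", "type": 0, "position": {"x": 2.0, "y": 1.0}},
    {"name": "lever", "type": 2, "position": 0, "angle": 0.5}
  ],
  "joint": [{"type": "revolute", "bodyA": 0, "bodyB": 1}]
}"""
```

Calling `parse_scene(SAMPLE)` yields a static `toaster` body at (2, 1), a dynamic `lever` body at the origin, and one `revolute` joint linking them; each definition would then be handed to the physics engine to create the actual bodies and joints.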

Presentation

The game was presented at a party at Galerie Heba for friends and neighbours. Around 30 people tried out the game, including children and adults.

Reflection

I was (and am) proud of how much I learnt on this project, and that I was able to bring all the elements together into a working game that was pretty fun to play. It definitely encouraged interaction between strangers, and people engaged cooperatively to solve the puzzles. However, I also see a lot to be critical of. I never really found a satisfactory way of translating the computer vision input into the physics-driven world of the game. Responses were laggy and unpredictable, and remained error-prone for large and rapid movements (objects would pass through other objects, or disappear and reappear in different places). Later I also realised that less structured tasks can still use gamification techniques while allowing more creativity and self-expression from the participants.

Technology

The scenes and objects were designed in RUBE Box2D Editor and imported into a vvvv patch using a vvvv library I developed. The vvvv patch manages the game environment and state, including the current level, language, and goal state. Players interact using cards with pictures of the on-screen objects printed on one side and fiducial markers on the other. When a player moves a card in front of a camera, the computer detects the marker's location and the vvvv patch translates that into movement of the corresponding object on screen.
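The Technology paragraph above describes two jobs for the patch: tracking game state and turning detected markers into object movement. A minimal Python sketch of both follows, assuming a marker tracker that reports each marker's ID and a position normalised to [0, 1] with the vertical axis pointing down, as image coordinates usually do. The marker IDs, object names, world size, the horizontal mirroring, and all class and function names are hypothetical; the original vvvv patch may have been organised quite differently.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Phase(Enum):
    MENU = auto()
    IN_LEVEL = auto()
    GOAL_ACHIEVED = auto()


# Hypothetical mapping from fiducial marker IDs to on-screen objects.
MARKER_TO_OBJECT = {3: "kettle", 7: "ball"}


@dataclass
class GameState:
    level: int = 0
    language: str = "en"
    phase: Phase = Phase.MENU
    goal_reached: bool = False

    def start_level(self, level):
        self.level = level
        self.goal_reached = False
        self.phase = Phase.IN_LEVEL

    def report_goal(self):
        # Only a level that is currently running can be won.
        if self.phase is Phase.IN_LEVEL:
            self.goal_reached = True
            self.phase = Phase.GOAL_ACHIEVED


def marker_to_world(u, v, world_w=16.0, world_h=9.0, mirror=True):
    """Map normalised camera coords (v pointing down) to world units
    with y pointing up, the usual convention when gravity is along -y."""
    if mirror:
        u = 1.0 - u  # assumed: camera faces the players, so flip x
    return u * world_w, (1.0 - v) * world_h


def apply_marker(state, marker_id, u, v, targets):
    """Record a target position for the object bound to marker_id."""
    if state.phase is not Phase.IN_LEVEL:
        return  # ignore cards on the menu and goal screens
    name = MARKER_TO_OBJECT.get(marker_id)
    if name is not None:
        targets[name] = marker_to_world(u, v)
```

Gating marker handling on the current phase is one simple way to keep state switching in a single place: input is ignored on the menu and goal screens, rather than every object checking the game state itself.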

Installation

May 2015

Galerie Heba

Berlin, Germany

Role

Interaction design

Software development

Tools and Technology

Box2D physics

vvvv

Kinect

Fiducial markers